Their model represents individual relationships one at a time, then combines these representations to describe the overall scene.

This enables the model to generate more accurate images from text descriptions, even when the scene includes several objects that are arranged in different relationships with one another.

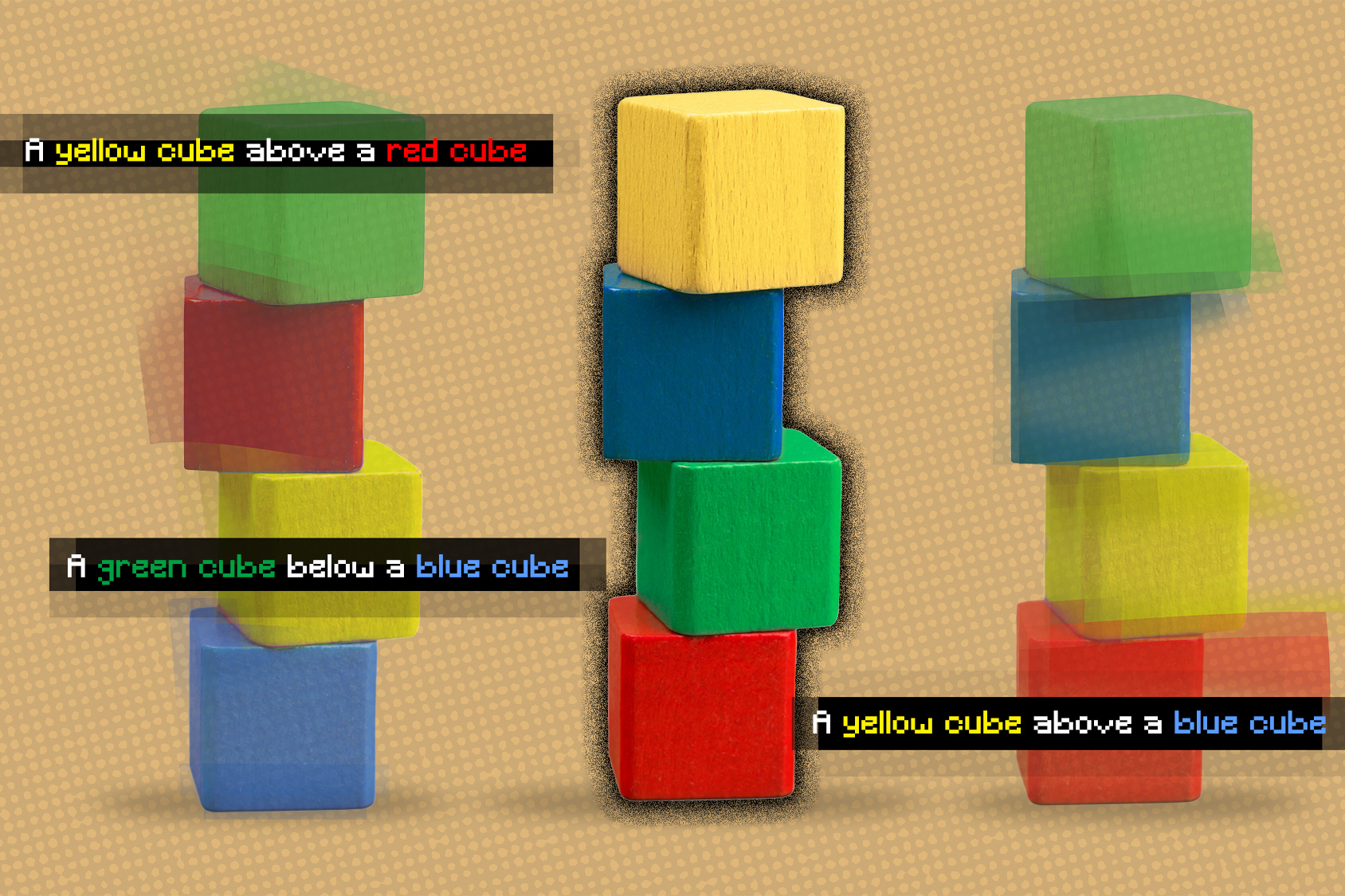

The framework the researchers developed can generate an image of a scene based on a text description of objects and their relationships, like “A wood table to the left of a blue stool.”

The researchers used a machine-learning technique called energy-based models to represent the individual object relationships in a scene description.

However, other approaches fail when given out-of-distribution descriptions, such as descriptions with more relations, since these models can’t really adapt in one shot to generate images containing more relationships.
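To make the idea of scoring each relationship separately and then combining the scores more concrete, here is a minimal toy sketch. It is not the researchers' implementation: the one-dimensional "scene," the hinge-style energy, the `MARGIN` constant, the function names, and the choice to combine energies by summing them are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Scene = Dict[str, float]         # object name -> x position in a toy 1-D "scene"
Relation = Tuple[str, str, str]  # (subject, predicate, object)

MARGIN = 1.0  # hypothetical gap needed before a relation counts as satisfied


def relation_energy(scene: Scene, relation: Relation) -> float:
    """Energy of a single relation: 0 when satisfied, larger when violated."""
    subject, predicate, obj = relation
    if predicate == "left of":
        gap = scene[obj] - scene[subject]
    elif predicate == "right of":
        gap = scene[subject] - scene[obj]
    else:
        raise ValueError(f"unsupported predicate: {predicate}")
    return max(0.0, MARGIN - gap) ** 2


def total_energy(scene: Scene, relations: List[Relation]) -> float:
    """Compose relations by summing their individual energies (an assumption)."""
    return sum(relation_energy(scene, r) for r in relations)


def generate(relations: List[Relation], steps: int = 500,
             lr: float = 0.1, eps: float = 1e-4) -> Scene:
    """Search for a low-energy scene by gradient descent with finite differences."""
    names = sorted({name for s, _, o in relations for name in (s, o)})
    scene = {name: 0.0 for name in names}
    for _ in range(steps):
        grads = {}
        for name in names:
            up = {**scene, name: scene[name] + eps}
            down = {**scene, name: scene[name] - eps}
            grads[name] = (total_energy(up, relations)
                           - total_energy(down, relations)) / (2 * eps)
        for name in names:
            scene[name] -= lr * grads[name]
    return scene


# Two relations are handled by the same code path as one; adding a third
# only appends another term to the sum.
relations = [("table", "left of", "stool"), ("sofa", "right of", "stool")]
scene = generate(relations)
print(scene, total_energy(scene, relations))
```

Because each relation contributes its own term, a longer description only lengthens the list passed to `generate`; nothing in the toy needs to be retrained or restructured.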

The system also works in reverse: given an image, it can find text descriptions that match the relationships between objects in the scene. In addition, their model can be used to edit an image by rearranging the objects in the scene so they match a new description.
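The reverse direction can be sketched in the same toy setting. The example below assumes that the best-matching description is the one whose relations have the lowest combined energy for a given scene; that selection rule, the one-dimensional scene, and the names `relation_energy` and `best_description` are illustrative assumptions, not details from the researchers' system.

```python
from typing import Dict, List, Tuple

Scene = Dict[str, float]         # object name -> x position in a toy 1-D "scene"
Relation = Tuple[str, str, str]  # (subject, predicate, object)


def relation_energy(scene: Scene, relation: Relation, margin: float = 1.0) -> float:
    """Hypothetical energy: 0 when the relation holds, larger when it does not."""
    subject, predicate, obj = relation
    if predicate == "left of":
        gap = scene[obj] - scene[subject]
    elif predicate == "right of":
        gap = scene[subject] - scene[obj]
    else:
        raise ValueError(f"unsupported predicate: {predicate}")
    return max(0.0, margin - gap) ** 2


def best_description(scene: Scene,
                     candidates: List[List[Relation]]) -> List[Relation]:
    """Pick the candidate description with the lowest summed energy."""
    return min(candidates,
               key=lambda rels: sum(relation_energy(scene, r) for r in rels))


# A fixed scene stands in for an input image.
observed = {"table": 0.0, "stool": 2.0}
candidates = [
    [("table", "right of", "stool")],
    [("table", "left of", "stool")],
]
print(best_description(observed, candidates))
```

Editing could be approximated in this toy by starting the energy minimization from an existing scene rather than from scratch, so that objects move only as far as the new description requires.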

The researchers compared their model to other deep learning methods that were given text descriptions and tasked with generating images that displayed the corresponding objects and their relationships.

“One interesting thing we found is that for our model, we can increase our sentence from having one relation description to having two, or three, or even four descriptions, and our approach continues to be able to generate images that are correctly described by those descriptions, while other methods fail,” Du says.

The researchers also showed the model images of scenes it hadn’t seen before, as well as several different text descriptions of each image, and it was able to successfully identify the description that best matched the object relationships in the image. And when the researchers gave the system two relational scene descriptions that described the same image but in different ways, the model was able to understand that the descriptions were equivalent.

While these early results are encouraging, the researchers would like to see how their model performs on real-world images that are more complex, with noisy backgrounds and objects that are blocking one another. They are also interested in eventually incorporating their model into robotics systems, enabling a robot to infer object relationships from videos and then apply this knowledge to manipulate objects in the world.